Changes in accessing GPU on FRA1-1 Cloud EO-Lab

The following documentation describes the changes related to the implementation of a new GPU access mode in the FRA1-1 cloud.

The difference between GPU pass-through (PT) and vGPU modes

Until now, GPU instances have been launched in pass-through mode, which means that an entire physical GPU was directly assigned to one virtual machine (VM). The whole GPU is accessed exclusively by the NVIDIA driver running in the VM to which it is assigned, and it is not shared among VMs.

NVIDIA Virtual GPU (vGPU) enables multiple virtual machines to have simultaneous, direct access to a single physical GPU, using the same NVIDIA graphics drivers that are deployed on non-virtualized operating systems. VMs keep the graphics performance, compute performance, and application compatibility of the GPU, while sharing it among multiple workloads improves cost-effectiveness and scalability. In the FRA1-1 cloud, vGPU is implemented using two virtualization modes and dedicated NVIDIA GRID drivers.

Introduced changes

Until now, users have had two flavors available for their instances based on NVIDIA A100 and RTX A6000 graphics cards, with the following configurations:

| flavor name | vCPU | RAM | disk |
|---|---|---|---|
| gpu.a100 | 24 | 118 GB | 64 GB |
| gpu.a6000 | 24 | 118 GB | 800 GB |

Both flavors were configured to access the GPU in pass-through mode, so an entire physical GPU was assigned to one VM. Configuring the instance was additionally the user’s responsibility, which involved installing NVIDIA drivers and, optionally, CUDA tools.

As part of these changes, new vGPU flavors are being introduced to the cloud. To make better use of computing performance, instances based on vGPU flavors will have access to high-speed local SPDK storage based on NVMe disks.

A100

| flavor name | vCPU | RAM | disk |
|---|---|---|---|
| vm.a100.1 | 1 | 14 GB | 40 GB |
| vm.a100.2 | 3 | 28 GB | 40 GB |
| vm.a100.3 | 6 | 56 GB | 80 GB |
| vm.a100.7 | 12 | 112 GB | 80 GB |

A6000

RTX A6000 cards operate in Time-Sliced virtualization mode. In this mode, each instance receives its own separate slice of VRAM, while GPU time is shared between instances by the scheduler.

| flavor name | vCPU | RAM | disk |
|---|---|---|---|
| vm.a6000.1 | 1 | 14 GB | 40 GB |
| vm.a6000.2 | 3 | 28 GB | 40 GB |
| vm.a6000.4 | 6 | 56 GB | 80 GB |
| vm.a6000.8 | 12 | 112 GB | 80 GB |

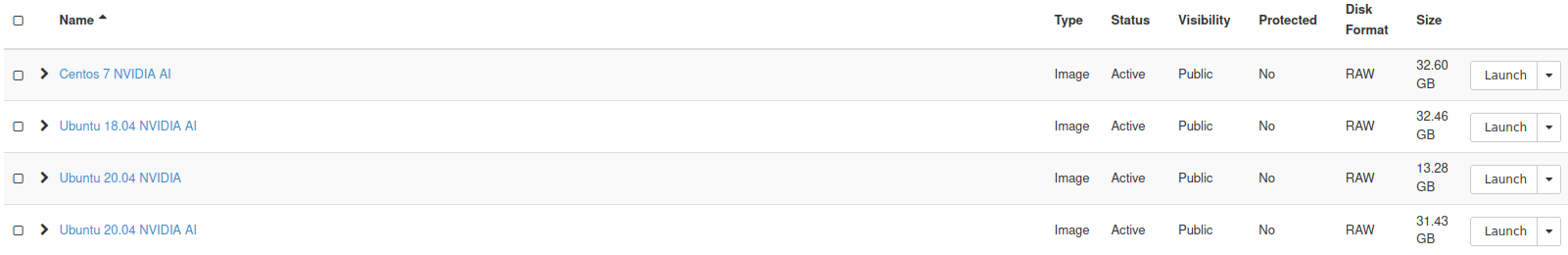

Images

Preconfigured images have also been added.

All new NVIDIA images have dedicated drivers and CUDA tools preinstalled. NVIDIA AI images additionally include packages that support machine learning, such as TensorFlow, PyTorch, scikit-learn and R.

Using one of the above images is mandatory when launching a new virtual machine with a vGPU flavor. Otherwise, the virtual graphics card may not be recognised.

Steps to be taken after launching a vGPU virtual machine

Connect to the vGPU virtual machine once it has been successfully launched.

To check the status of the vGPU device, enter the command:

nvidia-smi

The command output should provide information about the GPU in use, its current utilisation, running processes, and the driver and CUDA versions.
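For scripted checks, the same information can be requested in machine-readable form. The following is a minimal sketch, assuming the preinstalled nvidia-smi supports its standard --query-gpu and --format options; the helper name gpu_summary and the NVIDIA_SMI override variable are hypothetical, and some fields may be reported as N/A on a vGPU.

```shell
# gpu_summary: print one CSV line per visible GPU with its name, driver
# version, total memory and current utilisation.
# NVIDIA_SMI is a hypothetical override for the binary name or path.
gpu_summary() {
    smi="${NVIDIA_SMI:-nvidia-smi}"
    if ! command -v "$smi" >/dev/null 2>&1; then
        echo "$smi not found; was the VM created from an NVIDIA image?" >&2
        return 1
    fi
    "$smi" --query-gpu=name,driver_version,memory.total,utilization.gpu \
           --format=csv,noheader
}
```

Calling gpu_summary on the VM prints the selected fields, or returns a non-zero status if the tool is missing.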

To check the vGPU software licence, run the command:

nvidia-smi -q | grep -i license

When a licence is active, the command output will display the corresponding status and expiry date.

root@vm01:# nvidia-smi -q | grep -i license

vGPU Software Licensed Product

License Status : Licensed (Expiry: 2022-10-20 12:40:45 GMT)
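In a start-up or monitoring script, the licence check can be turned into an exit status. This is a minimal sketch that assumes the output format shown above; the function name license_ok is hypothetical.

```shell
# license_ok: read `nvidia-smi -q` output on stdin and succeed only if a
# "License Status" line reports "Licensed" (an "Unlicensed" status fails).
license_ok() {
    grep -i 'License Status' | grep -q ': *Licensed'
}
```

A possible use is `nvidia-smi -q | license_ok || echo "vGPU licence not active" >&2`.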

How to launch an environment with AI libraries on NVIDIA AI images

Currently installed package versions:

Python: 3.10.4

TensorFlow: 2.9.1

PyTorch: 1.11.0+cu102

scikit-learn: 1.1.1

R: 4.2.0

To launch the ai-support environment, activate it with the command:

conda activate ai-support

Once the command has been executed, all tools are available.
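To confirm that activation worked, the versions actually imported by the interpreter can be compared with the list above. This is a minimal sketch, assuming that python on the PATH is the environment's interpreter; the function name report_versions is hypothetical.

```shell
# report_versions: for each library, print its name and version as seen by
# the active interpreter, or a notice if it cannot be imported.
report_versions() {
    for mod in tensorflow torch sklearn; do
        python -c "import $mod; print('$mod', $mod.__version__)" 2>/dev/null \
            || echo "$mod: not importable with this interpreter"
    done
}
```

Run report_versions after conda activate ai-support; a "not importable" line suggests the environment is not active.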

Note

The packages in this environment have their own dependencies, which may conflict with the dependencies of packages installed on the virtual machine’s operating system. For example, in the CentOS 7 image the yum command may not work while the ai-support environment is active.

To avoid such conflicts, leave the environment before doing any work outside it, using the command:

conda deactivate
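Scripts that call system tools can check whether the environment is still active before proceeding. This is a minimal sketch that relies on the CONDA_DEFAULT_ENV variable, which conda sets on activation; the function name in_ai_support is hypothetical.

```shell
# in_ai_support: succeed only if the ai-support conda environment is the
# currently active one, as reported by CONDA_DEFAULT_ENV.
in_ai_support() {
    [ "${CONDA_DEFAULT_ENV:-}" = "ai-support" ]
}
```

For example, `if in_ai_support; then conda deactivate; fi` can precede a yum call in an interactive shell.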

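To add a user to the conda group, run the following command, replacing username with the name of the account:

usermod -a -G conda username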

Resources: